Tags

I have previously written about the powerful ETL (Extract, Transform and Load) functions that are now in Excel Office 365 desktop edition. We have seen how we can combine tables and unpivot data (Excel articles). In this article I will show you how to create a history table for imported data.

It is very common that you want to import data every day and keep it for future reference. It is easy to set up an import but unfortunately Excel always overwrites the old data with the current records. You might think that you could append data, after all there is a query type call append. However this does not append data to an existing table.

This problems has been discussed by Dirk Verliefden and Chris Dutch and others on Technet and I have built on their solution.

The trick is to create two tables

- A table bound to a query that gets the current data

- A table that appends the current data to itself

We cannot achieve this directly but can if we create a temporary table first.

Step 1: Create a table for your current data

Use Get and Transform Data on the Data Tab in the normal way.

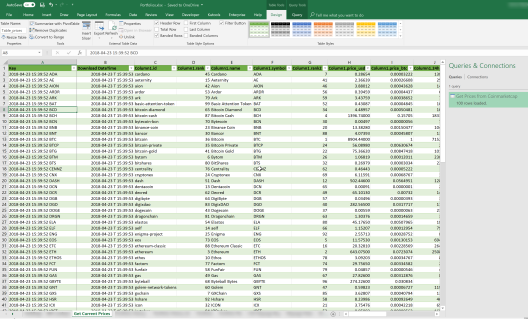

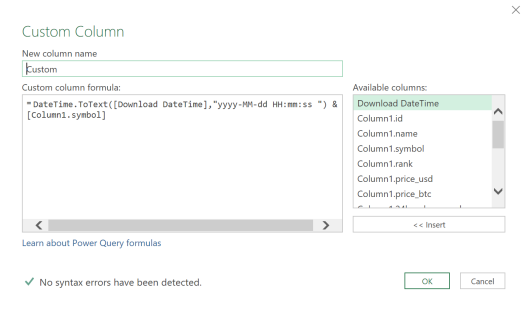

You must make sure it has a key column. I use one derived from the current data and time as shown below. My table is called “Table_prices” (top right).

Step 2: Copy these data values into a new tab called “temp”

Step 3: Turn this into a new table

Rename this table (top right) to “table_temp”.

Step 4: Create a new query working against this table (this will be our final query)

Doing this will create a new worksheet which will be our final history table. Rename it History_Worksheet.

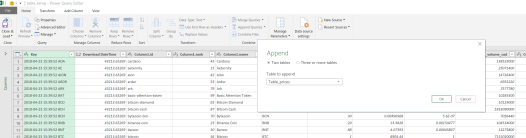

Step 5: Edit the query to append the current data

At the moment this is normal query. We will edit it and convert it into an Append query Home > Combine > Append. In my case the data I download is in Table_prices.

Save this query and view your worksheet History_Worksheet. It will now contain rows from both your original table and your temporary table.

Rename this new table “table_history”.

Step 6: Hack the query to use the history table instead of temp

Edit the query again. After the editor opens you click the Advanced Editor option (1) and look at the code (2). In line 2 it says that the Source for this table is “table_temp” and that this should be combined with the original data query, in my case “Table_prices” (line 4)

We replace “table_temp” in line 2 with the name of our new history table “table_history”. Now when this code runs it will use the history table as a data source instead of the temp table.

Save.

We have now achieved our goal. Each time we refresh the table additional rows will be added from the current data table.

Step 7: Delete the “temp” worksheet.

The worksheet temp is no longer required and should be deleted.

Step 8: Tweak the columns

You can now tweak both queries to get the correct columns. Common tasks include adding additional keys for use in VLOOKUPs and removing blank or duplicate rows.

I hope you find this post useful. I welcome both praise and comments below – JB

Thank you!!!

Hi James. Sounds cool. I download many times but like to store only the download from 1. of the month. Can this be achieved? I’m fairly new to power query, so have no idea.

Thanks, Daniel

Dear Daniel, I suggest that you simply make two copies of your download query. Run the daily download every day and then run the monthly with the archive hack on the first of each month. James

Thank you for this post. It is very useful and I was able to use it in Excel. However, in PowerBI, when i perform step-6, it generates an Cyclic Redundancy error since the query source is is the table that the query writes to. Any thoughts on working around this?

I have never encountered this error, so I had to look it up. Then I took another look at your question.

Your problem is that you’re trying to implement an Excel-specific kludge to Power BI. This works in Excel because it reads from and dumps data to a table in the spreadsheet. There is no way to store the data permanently in Power BI. If you adjusted a query like this in Power Query (in Excel), you would probably get a similar error.

Table name and query name are often similar or even the same, but they are functionally very different things.

(I am the Chris Dutch in question, if you’re curious.)

Thanks for the feedback

I am sorry but I am not a PowerBI user and so I cannot help with this problem. Good luck and please update this thread if you find a solution. James

Hello JB,

I am immensely grateful for your posting this step-by-step solution since I equally struggled to implement Dirk/Chris’ guidance which is sketchy at best.

In actual fact, yours seems to be the only actionable/cost-effective advice on incremental loads which I have come across in my googling.

Thanks a ton again and maybe one day I’ll get a chance to reciprocate 🙂

Cheers,

Nick

Your are very welcome. Good luck.

I am grateful for this step-by-step. It seems to address exactly what I am attempting to do.

I do, although, have one issue. I have the need on occasion to add lines to table that do not originate from my downloaded data. (i.e. Downloaded data might contain a single line that I need to split into two lines for assign portions of the amount to separate accounts). When I add lines to my History table, they are removed whenever I refresh with new data. Am I missing something or do I need to develop another method of adding “new” lines?

Dear Aaron, Thank you for your comment. The History Table is a target range for an automatic update and therefore like all such target ranges cannot be manually edited. You will need to develop a new method of adding lines. You may wish to consider creating putting them in a table “table_new_lines” and then doing a union query between “table_history” and “table_new_lines”. This will produce a new table, “table_history_with_new_lines”. Good luck, James

Thank you for a great solution! I was able to use this to solve a problem I am working on. I also would love to hear if a solution is found to the Power BI side of it that jp mentioned.

Thank you for your kind words. I will keep an eye out for the Power BI fix and update this thread if I see one.

When your History Worksheets updates/appends the current info, does it overwrite existing entries? Or does it simply append? If it appends, then what are your thoughts on preventing double entries? My immediate thought is: In my case the data I’m pulling updates in 1 hour increments, so I’m thinking I just set my auto-refresh for 4 hours and pray it captures everything… I can see where lags in the websites updates could cause some info to be missed, but its the best solution I have.

This recipe solves the overwriting problem. Any new rows should have a unique key; if your source does not provide a key then you can create a new column using the current time. eg. “201908190950”. This will ensure that your history table can be sorted and filtered correctly. I hope this helps.

I’m really struggling to follow this! could you make it a little clearer? you mention tables but can you ref which are power query tables and excel? same for queries and worksheets…??

At Step 1 we are using the “Get and Transform Data” menu. This will get our data and create a table in Excel with a key column. I use the Table Tools tab to name my new table “Table_prices”.

At Steps 2 and 3, I create a new Excel table with this data. Nothing clever here

We now have two Excel tables with the same data. The first is bound to our data source. The second is just a temporary table that we are going to use to generate some suitable power query code.

At Step 4. We use Get and Transform Data to create a 3rd and final table from this temporary table and name this new table History_Worksheet. This will contain our history.

The following two steps change the code that generates this table. First we make it into an Append (Union) query, and then we hack it so it uses itself as a source rather than the temporary table. The first table is used to import new rows, and these are then added to this final table.

I hope this helps. Excel has change a bit over the last year so look at the images very carefully to see the menu names and options.

I have tried this several times. Each time I refresh my history table it duplicates what is there and pulls in all the data from my source.

For example, I have 400 rows on my history and 100 on my source table. When I refresh the history, it loads 500 rows. Refresh again it loads 1,000, etc.

I think you may have got two of the tables the wrong way round in your code. Try using exactly the same names that I have and exactly the same code. Good luck.

Thanks for the fast reply.

I found that if second query is a connection only, then the correct number of rows updates consistently.

However, the table still refreshes all rows so I can’t edit the historical previous rows.

If it helps explain what I’m trying to accomplish, I have an Excel workbook that automatically imports bank transactions periodically throughout the day.

I am categorizing the transactions and renaming some of the vendors to clarify what they are.

That’s for me the case as well – i also tried it many times and more duplicate rows keep showing up

This is likely because you’re doing a full read of the data from both your history table and your data source and merging them in your query. This only works if you’re building a table incrementally.

My use case when I did this 5-6 years ago was that I had a waterfall report that pulled data from Salesforce, and rather than try to query the full DB every Monday morning (~1.2M records IIRC, of which maybe 5% were relevant, filtered with some complex logic), I ran a report for just the previous week and appended it to the existing table.

Great Help

So this is a great technique, and I think it could be the base to solve one of my working issues. I have a set of data, it include transaction lines, each assigned to a category. There are approximately 100 categories. I have created a group by query and table that groups the categories. I want to assign the categories to types so I can build aggregated reporting using the type. Categories are periodically added, so I can’t use it statically, so I want to update the categories list each time I run the query. But the type that I manually edit in the table is not persistent. Any thoughts on how to add data columns that can remain persistent, even when updated? Thanks!

I have not been working with Power Query recently so unfortunately I don’t have any ideas. If you do find a solution please update this thread for the benefit of others. Good luck – James

Excellent, thank you!!!! Saved me a lot of trouble!

Hi James,

Thanks for the solution to this, as I was reading the previous comments on this thread I could feel first hand how this is a common issue found by several people, and it was also my case.

I’d say that this solution is a huge improvement on what I had before. The report I wanted to create was not practical at all if I had to do it manually, so I implemented it using Power Query in Excel, so as days went by I was having a very hard time trying to update this report, due to the hourly updates of the base data and the awfully long refresh times spanning 1h to 2h every morning when refreshing my report.

Then I found your solution and a couple of days after having it implemented, I can refresh the database hourly taking only a couple of seconds for each refresh.

Thank you and I hope to reciprocate as well.

That’s really good! Thanks for that. Can you see a way to implement this in PowerBI ?

Hi that’s really good! Can you see a way to implement this in PowerBI ?

Oddly, I am not a PowerBI user because as a CTO I tend do more quick and dirty analysis in Excel but if you do find a solution please do post it in this thread.

No.

Hi ! Awesome work!

I managed to make it work but everytime I refresh my table, all the new inputs are added in the history, including the ones that were previously already here, creating doublons.

I believe that’s because I did not create a “key column” properly.

Basically my columns are: date of the quotation and price of the quotation.

A new price is set for every new day.

Could you please guide me on how to define my column with the date as a key so that everytime I refresh, only new dates are added to my history?

Thanks a lot!

Regards.

First decide on your unique key in the source data. If your source has the columns “Product_Id”,”Product_Name”, “Price” then your key in the source would be “Product_Id” because this appears 0 or 1 times in every daily feed.

You can see that if we simply imported the same data every day our resultant data set would not have a unique key because we would have many rows with the same Product_Id. To stop this happening when we import at Step 1 above we use today’s date and time. So all data imported today would have the key

* {Date and Time of Import}-{Product_Id}.

An example of a key with this format imported now might be

* 2020-05-23-09-39-02-bike00089.

I hope this helps.

Thank you for your quick answer.

I do understand the logic behind establishing the key column to avoid doublons, and I believe that the “date of quotation” could be a good unique key as the table is only composed of the price (of one stock) and the date (of the corresponding price).

Only two parameters like this:

https://www.casimages.com/i/200523065414391158.png.html

Therefore each day, we do have a new unique date data in the “date column” with a new price linked so I do not have to create a new column for the key, am I right ?

If yes, please could you help me to find out why do the previous rows, already in history, still get added again each time I reload ?

Thanks a lot for your support.

In each Table (new & old) must add new Column with “Date report”. In future it will be your KEY & and you may exponded by them

It sounds like a coding error. I suggest that you start again with a new workbook and follow the instructions above exactly. Good luck.

When refreshing the history data table with the new source data, is it not possible to append new (rows which have a new “Key” reference) and update existing rows the history tables rows with new data (where the “Key” is matched in both tables)?

Currently, when I refresh, the new source data is appended regardless if a line, including key, is an exact match for other rows currently in the table.

Good question. No – you cannot update rows in a _history_ table. If you want to report on present values then you should create a pivot table that uses the history table as its source and returns the appropriate records. I am sure there are other ways you could solve this problem but this post is about history tables. Good luck, James

This has been a massive help to me personally and consequently improved some of the excel tools that my team use.

Thanks, I appreciate the time and effort you put into this post ^^

All the best 🙂

If you needed to edit values from the historical record, because they were wrong in the initial input, how will you modify them? Normally, this is done by editing the data source, but how will this be done, if the data source is not accessible like in this case? Any ideas?

You will find that there is a simple copy of the source data in your history table. You can simply edit it in place.

So maybe this will work for this challenge, but I am not sure; so looking for some guidance.

I have a data set, a list of products. I read the file and import it into my data model and a table. In the data table I then assign each product to a summary category, for example, each of the 200 product ids are assigned to one of 10 product categories. When i refresh the table from the raw data (its not loading only new, its actually a rebuild based upon the product ids from a list of transactions). How can I retain the summary codes already assigned so I can only update for the new ids from the transactions?

Well your problem is not a good match for the title of the post “How to load only FRESH rows…”. Perhaps you should filter your import so you only import fresh rows. Alternatively and off-topic for this post it may be that your Summary Codes should be a lookup and not a literal value. They would then simply update (to the same value) when you refreshed the data.

Excellent work around!

I used a query before step one to pull data from a data entry worksheet, then used your hack to make a designated worksheet for historical values.

Any thoughts on how to set these queries to run automatically once a day or once a week?

I note that you can set a dataset to refresh when a file is opened. So you could manually open the file or perhaps write a windows scheduled task to do it. That is a very 2010 way of doing it. The 2020 way would be to use Microsoft Flow (Power Automate). You would store the file in OneDrive (actually Sharepoint) and create a task. I have only just started using these tools but they are clearly the future for power users like us and will replace the scripts we use to run operational processes. If you have a go and can do it then do post back here!

Hello,

I’ve been trying to do this with PowerQuery and following the steps… my key is based on “Date” only which “yyyy-MM-dd”, and every time I append the query it duplicates the records… not sure why though. This is exactly what I was looking for but duplicates rows from temp table into history table are giving me a headache… any help is appreciated.

Hi PQPQ,

I have been reviewing other comments in this thread and I note that other users have suffered similar issues but unfortunately they have not posted how they fixed them.

I note that you have chosen not to include the seconds in your key. Therefore obviously if your run the same query twice in a day you will see duplicate records in the history table. I suggest that you add seconds to the key which will allow you to run the query as many times as you like and still have unique records. Alternatively you could add a query step to remove duplicates. Good luck.

“Alternatively you could add a query step to remove duplicates” – whoops. Actually I should have said “An additional query on the history table that removes duplicates”.

you crazy sob … you did it ! … cant believe that excel requires such a workaround for a simple operation (vs 1 line of code in R). spent several hours searching the web for this solution until i saw yours. thanks for sharing and where is the tip button for your pint mate?

You are welcome Adam. I really do it for the fame and glory but a pint would be very welcome (https://www.paypal.com/paypalme/JamesBayleySE4?locale.x=en_GB)

This looks like it will be just what I need! But I can’t see the images…not sure if it’s because of lousy internet speed at my office, or if those links are broken… 😦 I’m going to try to follow the steps without the illustrations, but it would be awesome if they were visible!

They are there for me! I have sent you a PDF.

Thanks for the method. So useful guys like you post this out there. Saves hours of tinkering and effort, especially when, like I do, work in Power Apps, BI & Excel.

Thanks for the feedback. It is nice to be appreciated.

Excellent presentation. I have reviewed other examples on the web both video and posts and I found your answer to be the most easily understood. I used your example and successfully created a history table. Thank you.

Thank you for the feedback. It is nice to know I helped.

James, its been a while since you wrote this article, but I can vouch that its still relevant and in use by me at least! Many thanks and well done. I was wondering are you aware of any recent MS updates which have eliminated the need for these steps? Right now I use your instructions to create the historic data and then draw the contents to Power BI via yet another Query where I visualise and share the historic data with stakeholders. Clearly this is a very manual exercise, but I have yet to find an alternative daily update methodology which simplifies the entire process (in particular, the historic update with daily data). Any advice or alternative courses of action much appreciated.

Thanks again, Sean.

Hi Sean, I think that this post is still the top resource on the web for this issue which indicates that there has not been progress. I am not using this technique at the moment because the data source changed and broke my implementation. It is a brittle solution at best. I will update this page if l learn more.

Works for me, thanks. If all data ever likely to be appended is distinct then I can dispense with a key column?

It is a long time since I wrote this post so I will just answer from general principles. Key columns are good things but if you want to remove it simply create an additional query that transforms the History Table.

That’s really great, thank you. The “hack the query” part at the end was the exact thing I needed. You might add that the query should filter out duplicates in the key column at the very end.

Why are you running a full query every time you run this? That entirely defeats the purpose of running an incrementally updating table?

I have a query for the history table, and a refresh query from an external data source with some transformations that I am appending to the history. The refresh imports data that is exported manually, which leaves room for human error. In case the selected dates overlap for two exports, I need to sort out duplicates. That way one can just select a generous timeframe (like 10 days instead of 7) and does not need to look up the exact date of the newest data first.

Plus, if someone hits refresh twice, the data from the refresh query will be appended twice.

The duplicate removal is just a failsafe. I won’t be the only one using the queries 🤨

Hi, this is very useful. But what happens when the historic table exceeds 1m rows of data? how do I scale this for large datasets? our monthly transactions now exceeds 1m rows

any help much appreciated

Local data storage is Excel is really for traditional spreadsheet operations. When dealing with large amounts of data Excel is typically used as a reporting tool that can draw pivot tables and charts but the heavy computation is done by a SQL Server (say). In your case you may wish to consider how you can store your daily data in your database server and then provide visualisation tools using Excel. Although I have never done it I know that Excel can connect to cubes produced by database servers so I am confident that “Excel for visualisation, database for storage and computation” will work for most challenges.

Yes, absolutely, use a different tool.

Now assuming for whatever reason you can’t:

Split the history table into separate tabs. Presumably you’re not updating the history all that often, just appending new data to your existing data set. You’ll have to then build the table in the data model by appending the tables in the tabs together, and depending how often your table is going to exceed 1m rows, you’ll probably want to think about using VBA in conjunction with PQ to make this easier to maintain.

None of this is good practice, but the people in charge of the checkbook often didn’t care about that. So abuse Excel if you absolutely have to, and lobby hard for a dedicated database solution until you get one.